AI and harm

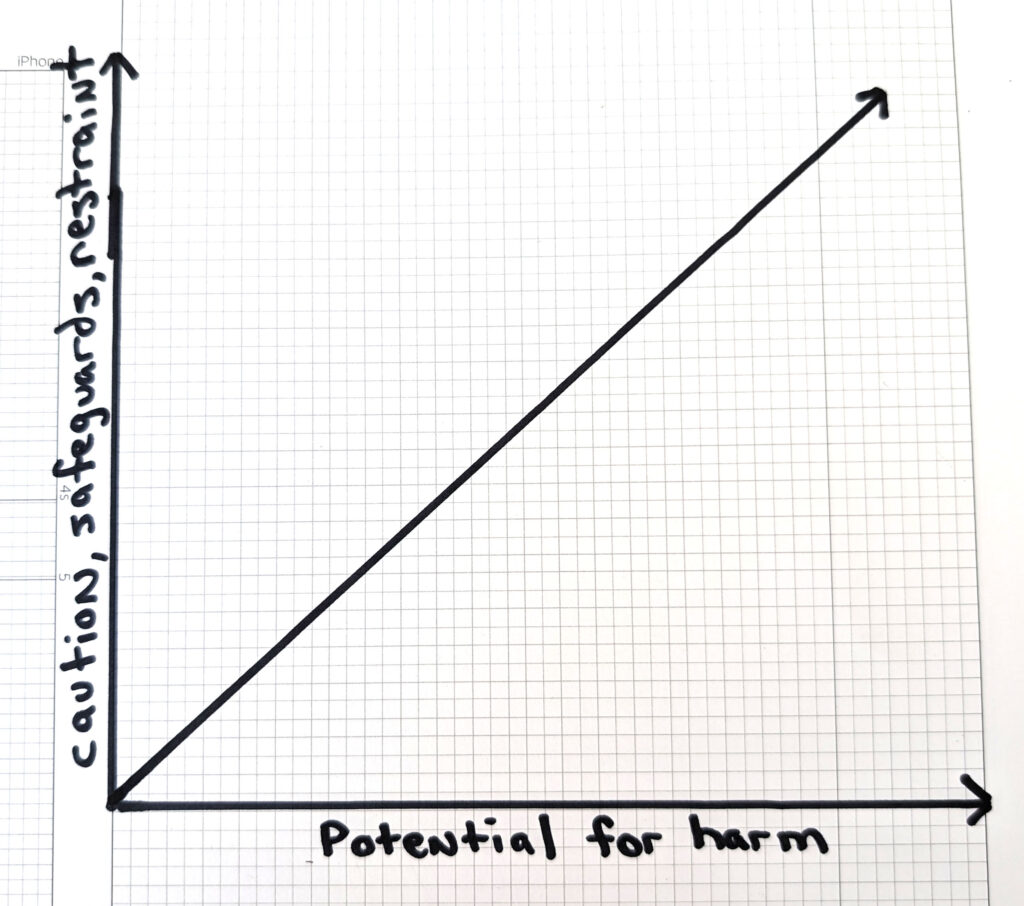

Every time I read about how AI is being applied in the world, this graph immediately pops up in my head:

As the potential to do harm increases, there must be a corresponding increase in the level of caution, skepticism, and restraint. More controls, redundancies, guardrails, and regulations need to be put in place as the stakes get higher.

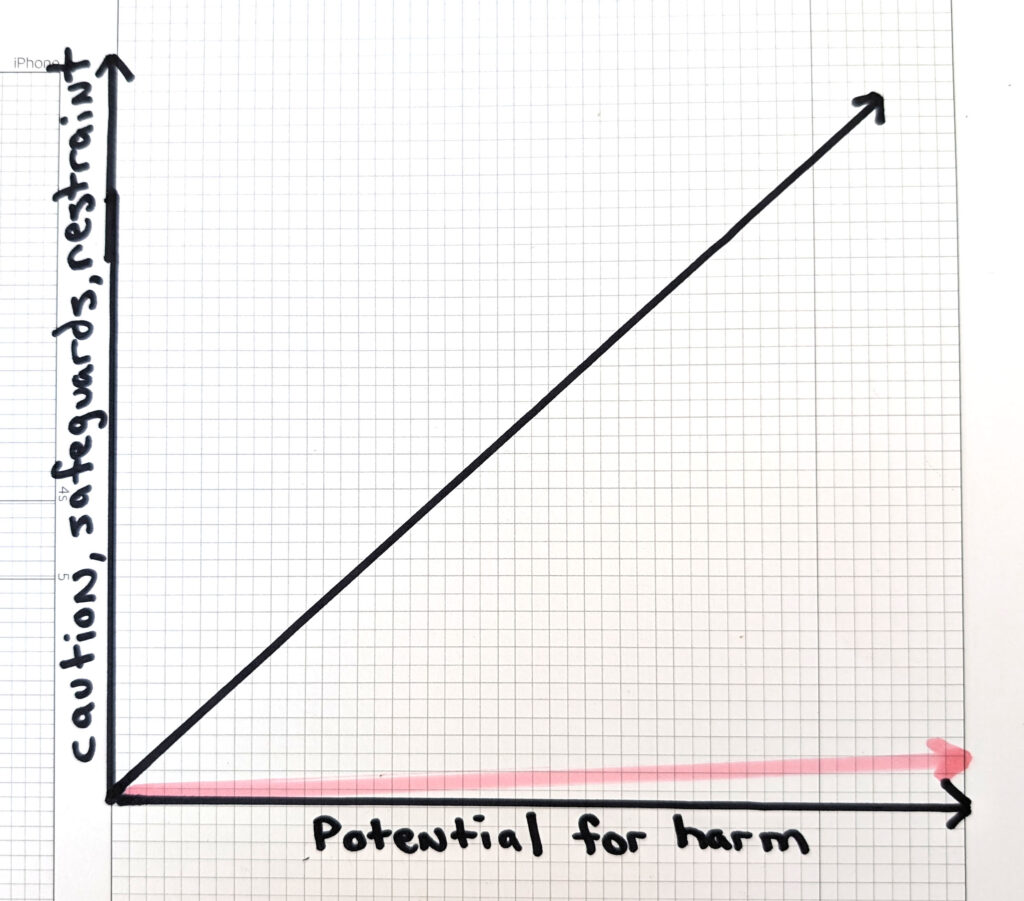

What’s alarming — yet sadly not at all surprising — is that this doesn’t appear to be playing out in reality. Reality looks something more like this:

In case after case, the fervor and urgency to adopt AI seem to stomp all over the need to exercise caution and responsibility and to establish critical safeguards that curtail harm.

I haven’t been able to shake the extraordinarily disturbing news that Israel’s Lavender AI system was (is?) used to determine bombing targets, with little more than a “rubber stamp” from human intelligence officers, and that its use resulted in many civilians being killed. We’re not talking about potential harm here; this is explicitly AI determining which human beings to kill. This level of recklessness is appalling, but unfortunately it’s not an isolated story.

AI is swiftly being woven into many facets of society: education, healthcare, criminal justice, hiring, and more, all of which have an immense impact on people. For each and every AI application, we must ask “what harm can this do?”, answer that question honestly and thoroughly, then exercise a level of caution and establish safeguards that are proportional to the amount of harm that can be done.